✍️ Prompt Engineering LLMs

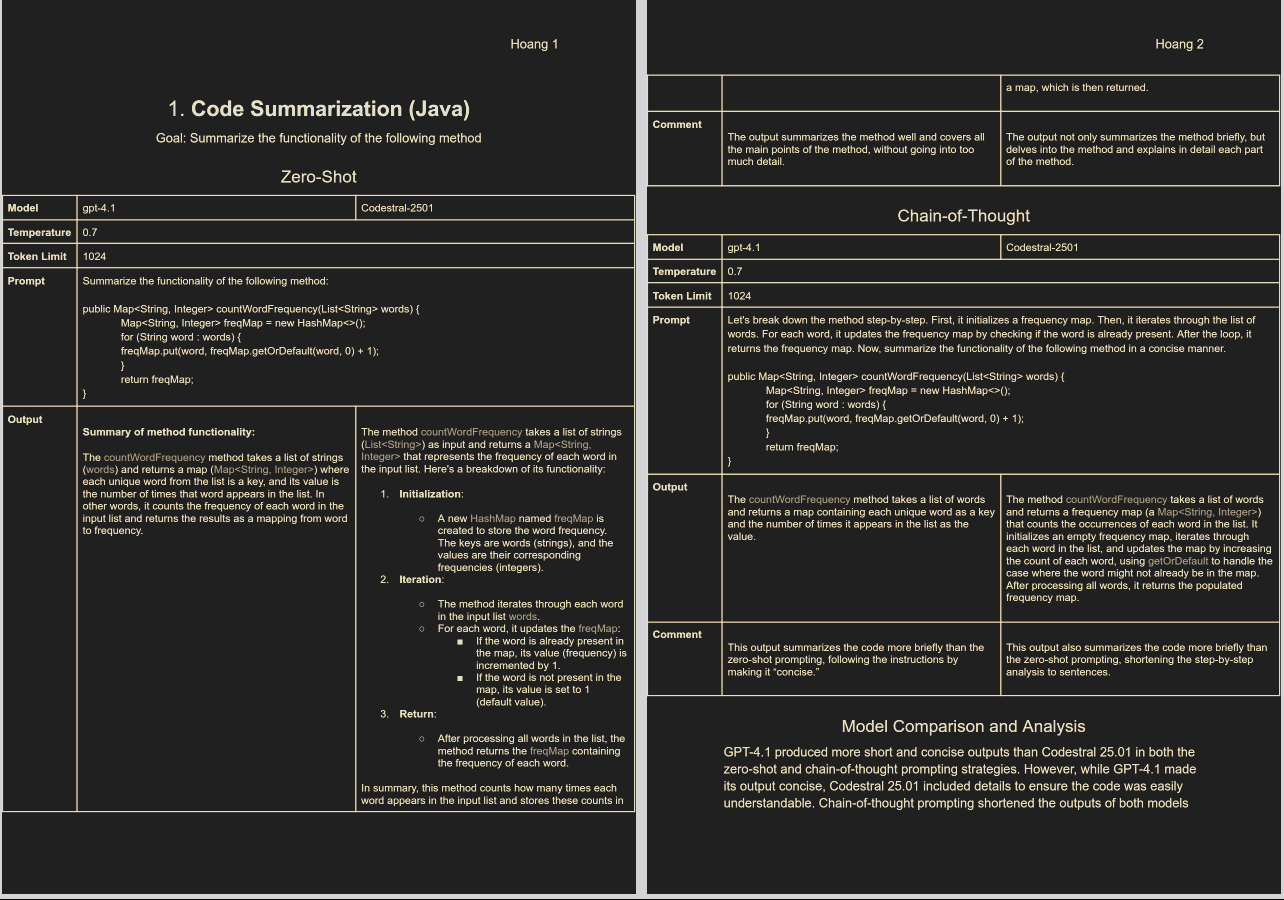

Figure 1: An analysis ofChatGPT-4.1andCodestral 25.01using the Code Summarization prompting strategy.

This project is about Prompt Engineering for In-Context Learning that investigates the impact of different prompt designs on the performance of Large Language Models (LLMs) across a variety of software engineering tasks.

In this assignment, five prompting strategies—zero-shot, few-shot, chain-of-thought, prompt-chaining, and self-consistency—were applied to 22 tasks, including code summarization, bug fixing, API generation, and code translation.

Experiments compared four models—gpt-4.1, Codestral-2501, gpt-4.1-mini, and gpt-4.1-nano—to demonstrate how the strategic use of prompt examples and structured reasoning influences the quality and clarity of generated code.

Self-consistency prompting employed 3 repetitions, with a temperature setting of 0.7 and a maximum token limit of 1024 tokens across all evaluations.