Automatically Discovering, Reporting and Reproducing Android Application Crashes - ICST 2016 Online Appendix

This web page is a companion to our ICST 2016 paper entitled "Automatically Discovering, Reporting and Reproducing Android Application Crashes".

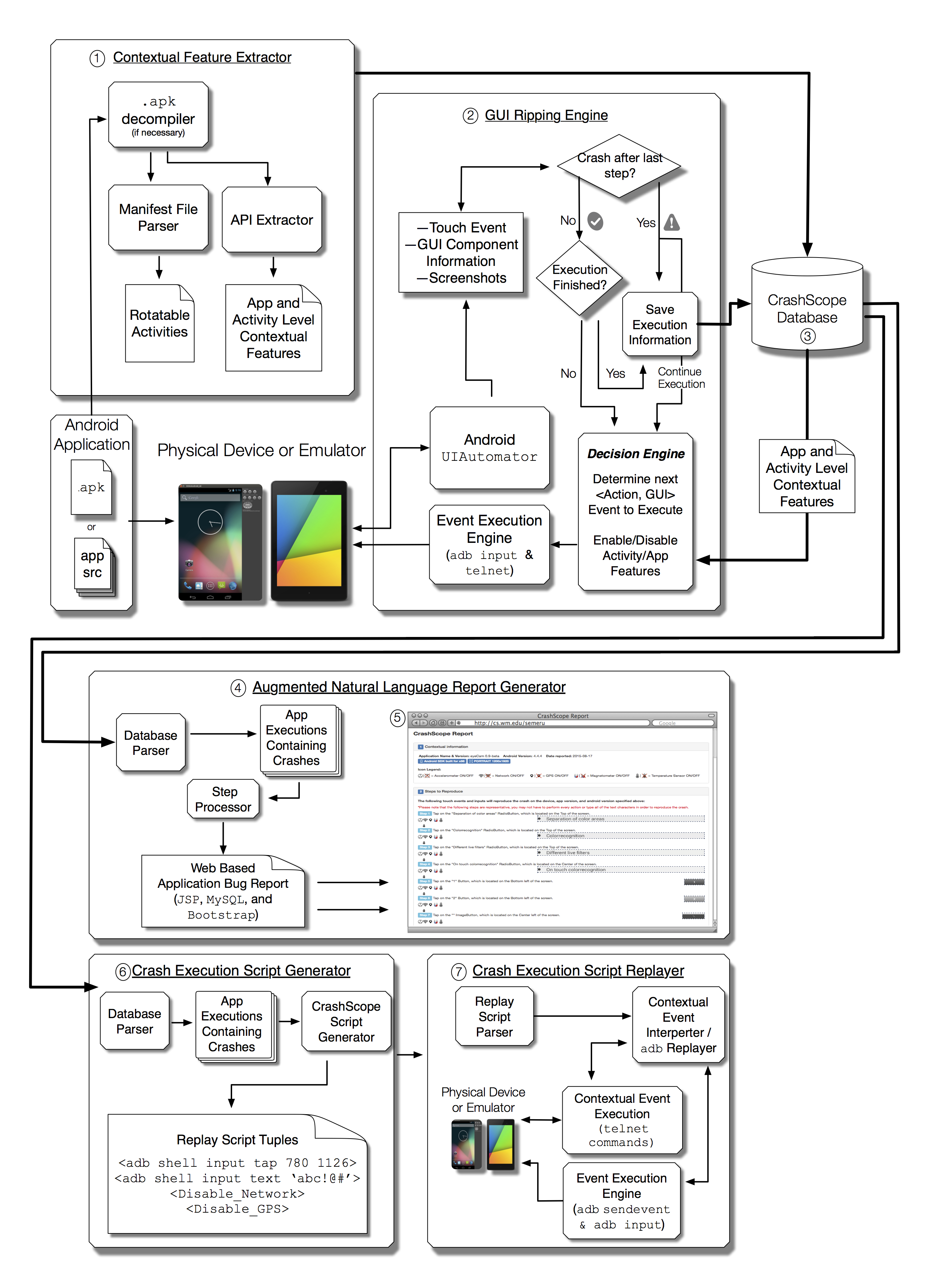

1. Framework: CrashScope

> Overview of CrashScope

*Image also available as a PDF

> Tools

Tools used in the contextual feature extraction are:

Tools used in the GUI ripping engine are:

Tools used in the report generation engine are:
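To make the idea of a systematic, crash-resilient GUI ripping engine more concrete, here is a minimal sketch under stated assumptions. It does not reproduce CrashScope's implementation. The app is modeled as a hypothetical in-memory graph in which each screen maps widget IDs to the screen they open, and the marker `"CRASH"` stands for an action that crashes the app. The explorer visits screens depth-first and records the action path leading to each crash instead of stopping.

```python
from dataclasses import dataclass, field

# Hypothetical app model: screen name -> {widget id -> screen opened by that widget}.
# The special target "CRASH" marks an action that makes the app crash.
AppModel = dict[str, dict[str, str]]


@dataclass
class ExplorationResult:
    visited: list[str] = field(default_factory=list)
    crash_paths: list[list[str]] = field(default_factory=list)


def explore_depth_first(model: AppModel, start: str) -> ExplorationResult:
    """Systematically visit every reachable screen, depth-first.

    When an action crashes the app, the action path that led to it is recorded
    and exploration continues with the next sibling action (a real tool would
    have to restart the app and replay the path prefix at this point).
    """
    result = ExplorationResult()
    seen: set[str] = set()

    def visit(screen: str, path: list[str]) -> None:
        seen.add(screen)
        result.visited.append(screen)
        for widget, target in model.get(screen, {}).items():
            step = path + [f"{screen}:{widget}"]
            if target == "CRASH":
                result.crash_paths.append(step)
            elif target not in seen:
                visit(target, step)

    visit(start, [])
    return result


# Hypothetical three-screen app with one crashing button.
model: AppModel = {
    "Main": {"settings_btn": "Settings", "calc_btn": "Result"},
    "Settings": {"save_btn": "CRASH", "back": "Main"},
    "Result": {"share_btn": "Share"},
}
res = explore_depth_first(model, "Main")
print(res.visited)      # ['Main', 'Settings', 'Result', 'Share']
print(res.crash_paths)  # [['Main:settings_btn', 'Settings:save_btn']]
```

Recording the full action path, rather than only the crashing widget, is what makes a detected crash replayable later.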

2. Overview of Features in Automated Testing Tools

| Tool | Instrumentation | Type of GUI Exploration | Types of Events | Crash Resilient | Replayable Test Cases | Natural-Language Crash Reports | Emulators, Devices |
|---|---|---|---|---|---|---|---|
| Dynodroid | Yes | Guided/Random | System, GUI, Text | Yes | No | No | No |
| EvoDroid | No | System/Evo | GUI | No | No | No | N/A |
| AndroidRipper | Yes | Systematic | GUI, Text | No | No | No | N/A |
| MobiGUItar | Yes | Model-Based | GUI, Text | No | Yes | No | N/A |
| A3E Depth-First | Yes | Systematic | GUI | No | No | No | Yes |
| A3E Targeted | Yes | Model-Based | GUI | No | No | No | Yes |
| SwiftHand | Yes | Model-Based | GUI, Text | N/A | No | No | Yes |
| PUMA | Yes | Programmable | System, GUI, Text | N/A | No | No | Yes |
| ACTEve | Yes | Systematic | GUI | N/A | No | No | Yes |
| VanarSena | Yes | Random | System, GUI, Text | Yes | Yes | No | N/A |
| Thor | Yes | Test Cases | Test Case Events | N/A | N/A | No | No |
| QUANTUM | Yes | Model-Based | System, GUI | N/A | Yes | No | N/A |
| AppDoctor | Yes | Multiple | System, GUI, Text | Yes | Yes | No | N/A |
| ORBIT | No | Model-Based | GUI | N/A | No | No | N/A |
| SPAG-C | No | Record/Replay | GUI | N/A | N/A | No | No |
| JPF-Android | No | Scripting | GUI | N/A | Yes | No | N/A |
| MonkeyLab | No | Model-Based | GUI, Text | No | Yes | No | Yes |
| CrashDroid | No | Manual Record/Replay | GUI, Text | Manual | Yes | Yes | Yes |
| CrashScope | No | Systematic | GUI, Text, System | Yes | Yes | Yes | Yes |

3. Results

RQ1 - What is CrashScope’s effectiveness in terms of detecting application crashes compared to other state-of-the-art Android testing approaches?

- CrashScope is about as effective at detecting crashes as the other tools. Furthermore, our approach lightens the burden on developers by producing fewer “false” crashes caused by instrumentation and by providing detailed crash reports.

| App | A3E | GUI-Ripper | Dynodroid | PUMA | Monkey (All) | CrashScope |
|---|---|---|---|---|---|---|
| A2DP Vol | 1 | 0 | 0 | 0 | 0 | 0 |
| aagtl | 0 | 0 | 1 | 0 | 1 | 0 |
| Amazed | 0 | 0 | 0 | 0 | 1 | 0 |
| HNDroid | 1 | 1 | 1 | 2 | 1 | 1 |
| BatteryDog | 0 | 0 | 1 | 0 | 1 | 0 |
| Soundboard | 0 | 1 | 0 | 0 | 0 | 0 |
| AKA | 0 | 0 | 0 | 0 | 1 | 0 |
| Bites | 0 | 0 | 0 | 0 | 1 | 0 |
| Yahtzee | 1 | 0 | 0 | 0 | 0 | 1 |
| ADSDroid | 1 | 1 | 1 | 1 | 1 | 1 |
| PassMaker | 1 | 0 | 0 | 0 | 1 | 1 |
| BlinkBattery | 0 | 0 | 0 | 0 | 1 | 0 |
| D&C | 0 | 0 | 0 | 0 | 1 | 0 |
| Photostream | 1 | 1 | 1 | 1 | 1 | 0 |
| AlarmKlock | 0 | 0 | 1 | 0 | 0 | 0 |
| Sanity | 1 | 1 | 0 | 0 | 0 | 0 |
| MyExpenses | 0 | 0 | 1 | 0 | 0 | 0 |
| Zooborns | 0 | 0 | 0 | 0 | 0 | 2 |
| ACal | 1 | 2 | 2 | 0 | 1 | 1 |
| Hotdeath | 0 | 2 | 0 | 0 | 0 | 1 |
| Total | 8 (21) | 9 (5) | 9 (6) | 4 (0) | 12 (1) | 8 (0) |

RQ2 - Does CrashScope detect different crashes compared to the other tools?

- The varying strategies of CrashScope allow the tool to detect different crashes compared to those detected by other approaches.
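One rough way to look at RQ2 using only the per-app counts in the RQ1 table is to list the apps in which exactly one tool reported any crash. This is an app-level proxy and an assumption of this sketch: the table gives counts, not crash identities, so two tools reporting crashes in the same app may or may not have found the same crash. The sketch also re-derives the column totals (the parenthesized numbers in the Total row are not part of this sum).

```python
# Crash counts per app, copied from the RQ1 table; columns follow TOOLS.
TOOLS = ["A3E", "GUI-Ripper", "Dynodroid", "PUMA", "Monkey", "CrashScope"]
CRASHES: dict[str, list[int]] = {
    "A2DP Vol": [1, 0, 0, 0, 0, 0],
    "aagtl": [0, 0, 1, 0, 1, 0],
    "Amazed": [0, 0, 0, 0, 1, 0],
    "HNDroid": [1, 1, 1, 2, 1, 1],
    "BatteryDog": [0, 0, 1, 0, 1, 0],
    "Soundboard": [0, 1, 0, 0, 0, 0],
    "AKA": [0, 0, 0, 0, 1, 0],
    "Bites": [0, 0, 0, 0, 1, 0],
    "Yahtzee": [1, 0, 0, 0, 0, 1],
    "ADSDroid": [1, 1, 1, 1, 1, 1],
    "PassMaker": [1, 0, 0, 0, 1, 1],
    "BlinkBattery": [0, 0, 0, 0, 1, 0],
    "D&C": [0, 0, 0, 0, 1, 0],
    "Photostream": [1, 1, 1, 1, 1, 0],
    "AlarmKlock": [0, 0, 1, 0, 0, 0],
    "Sanity": [1, 1, 0, 0, 0, 0],
    "MyExpenses": [0, 0, 1, 0, 0, 0],
    "Zooborns": [0, 0, 0, 0, 0, 2],
    "ACal": [1, 2, 2, 0, 1, 1],
    "Hotdeath": [0, 2, 0, 0, 0, 1],
}


def totals(crashes: dict[str, list[int]]) -> dict[str, int]:
    """Sum each tool's column of crash counts."""
    return {tool: sum(row[i] for row in crashes.values()) for i, tool in enumerate(TOOLS)}


def exclusive_apps(crashes: dict[str, list[int]]) -> dict[str, list[str]]:
    """Map each tool to the apps in which it was the only tool to report a crash."""
    exclusive: dict[str, list[str]] = {tool: [] for tool in TOOLS}
    for app, row in crashes.items():
        detectors = [tool for tool, count in zip(TOOLS, row) if count > 0]
        if len(detectors) == 1:
            exclusive[detectors[0]].append(app)
    return exclusive


print(totals(CRASHES))
# {'A3E': 8, 'GUI-Ripper': 9, 'Dynodroid': 9, 'PUMA': 4, 'Monkey': 12, 'CrashScope': 8}
print(exclusive_apps(CRASHES)["CrashScope"])  # ['Zooborns']
```

At this coarse level, Zooborns is the one app in which only CrashScope reported crashes, while Monkey alone reported crashes in five apps.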

RQ3 - Are some CrashScope execution strategies more effective at detecting crashes or exceptions than others?

- Some combinations of CrashScope strategies were more effective than others, suggesting the need for multiple testing strategies within a single tool.

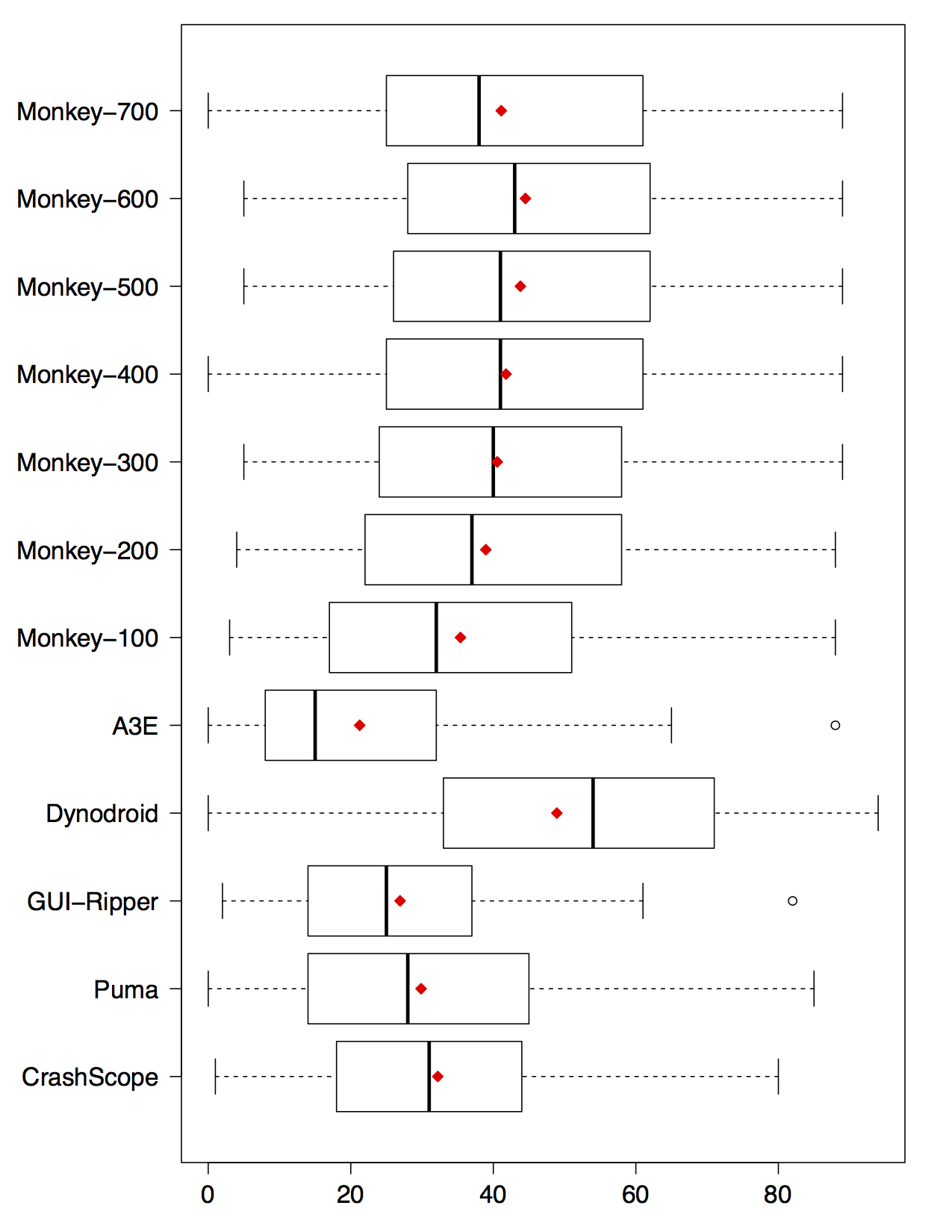
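The argument that several strategies belong in one tool can be illustrated with a greedy set-cover heuristic: given the crashes each strategy triggered, repeatedly pick the strategy that covers the most crashes not yet covered. This is a minimal sketch, not the analysis from the paper. The strategy names and crash signatures below are hypothetical toy values.

```python
def greedy_strategy_cover(found: dict[str, set[str]]) -> list[str]:
    """Pick strategies greedily until every crash seen by any strategy is covered.

    `found` maps a strategy name to the set of crash signatures it triggered.
    Ties are broken by dictionary order. Greedy selection is a heuristic and
    does not guarantee the smallest possible set of strategies.
    """
    remaining: set[str] = set().union(*found.values())
    chosen: list[str] = []
    while remaining:
        # Choose the strategy that covers the most still-uncovered crashes.
        best = max(found, key=lambda name: len(found[name] & remaining))
        chosen.append(best)
        remaining -= found[best]
    return chosen


# Hypothetical toy input: strategy names and crash signatures are made up.
found = {
    "top-down, expected text": {"crash-A", "crash-B"},
    "bottom-up, unexpected text": {"crash-B", "crash-C"},
    "top-down, no text, contextual features": {"crash-D"},
}
print(greedy_strategy_cover(found))
# ['top-down, expected text', 'bottom-up, unexpected text', 'top-down, no text, contextual features']
```

When no single strategy covers every crash, the cover necessarily contains more than one strategy, which is the situation the RQ3 finding describes.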

RQ4 - Does average application statement coverage correspond to a tool’s ability to detect crashes?

- Higher statement coverage of an automated mobile app testing tool does not necessarily imply that the tool will have effective fault-discovery capabilities.

RQ5 - Are reports generated with CrashScope more reproducible than the original human-written reports?

- Reports generated by CrashScope are about as reproducible as human-written reports extracted from open-source issue trackers.

Examples of crash reports:

- Report 1 - BMI Calculator

- Report 2 - 31C3 Schedule

- Report 3 - ADSdroid

- Report 4 - AnagramSolver

- Report 5 - eyeCam

- Report 6 - GnuCash

- Report 7 - Olam

- Report 8 - Card Game Scores
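Reproducibility depends on turning recorded steps into actions that can be replayed on a device. As a minimal sketch, assuming a hypothetical step schema (`tap`, `text`, `back`), recorded steps can be translated into argument lists for the standard `adb shell input` commands (`input tap X Y`, `input text`, `input keyevent KEYCODE_BACK`). This is not CrashScope's replay mechanism.

```python
from dataclasses import dataclass


@dataclass
class Step:
    kind: str        # hypothetical schema: "tap", "text" or "back"
    x: int = 0       # screen coordinates for "tap"
    y: int = 0
    text: str = ""   # characters to type for "text"


def to_adb_commands(steps: list[Step]) -> list[list[str]]:
    """Translate recorded steps into `adb shell input` argument lists."""
    commands: list[list[str]] = []
    for step in steps:
        if step.kind == "tap":
            commands.append(["adb", "shell", "input", "tap", str(step.x), str(step.y)])
        elif step.kind == "text":
            # `input text` reads "%s" as a space; other characters that the
            # device shell treats specially would still need escaping.
            commands.append(["adb", "shell", "input", "text", step.text.replace(" ", "%s")])
        elif step.kind == "back":
            commands.append(["adb", "shell", "input", "keyevent", "KEYCODE_BACK"])
        else:
            raise ValueError(f"unsupported step kind: {step.kind!r}")
    return commands


steps = [Step("tap", 540, 1200), Step("text", text="hello world"), Step("back")]
for cmd in to_adb_commands(steps):
    print(" ".join(cmd))
```

With a device or emulator attached, each argument list could be passed to `subprocess.run(cmd, check=True)`. Coordinate-based taps are brittle across screen sizes, which is one reason a report also benefits from describing the widget being touched.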

RQ6 - Are reports generated by CrashScope more readable than the original human-written reports?

- Reports generated by CrashScope are more readable and useful from a developer’s perspective than human-written reports.
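Readable reports describe each step in terms of the widget a developer sees, rather than raw coordinates. Below is a minimal template-based sketch, assuming a hypothetical action record (action name, widget type, widget label, optional typed value). The templates and field names are illustrative and not taken from CrashScope.

```python
from dataclasses import dataclass


@dataclass
class GuiAction:
    action: str      # hypothetical action names: "click", "long_click", "type"
    widget: str      # widget type, e.g. "button" or "text field"
    label: str       # visible text or resource name of the widget
    value: str = ""  # text entered, for "type" actions


# One sentence template per action type; unused fields are ignored by str.format.
TEMPLATES = {
    "click": 'Tap the "{label}" {widget}.',
    "long_click": 'Long-press the "{label}" {widget}.',
    "type": 'Type "{value}" into the "{label}" {widget}.',
}


def render_steps(actions: list[GuiAction], crash_summary: str) -> str:
    """Render numbered natural-language reproduction steps for a crash report."""
    lines = ["Steps to reproduce:"]
    for i, a in enumerate(actions, start=1):
        sentence = TEMPLATES[a.action].format(widget=a.widget, label=a.label, value=a.value)
        lines.append(f"{i}. {sentence}")
    lines.append(f"Result: the app crashes ({crash_summary}).")
    return "\n".join(lines)


actions = [
    GuiAction("type", "text field", "Amount", "abc"),
    GuiAction("click", "button", "Calculate"),
]
print(render_steps(actions, "java.lang.NumberFormatException"))
```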

4. Data

CrashScope Tool Comparison Study Data

- Here is the link to the data that contains all the coverage files, logcat files, and extracted exceptions files
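The extracted exceptions files are derived from logcat output. As a minimal sketch of that kind of extraction, assuming logs captured with `adb logcat -v threadtime`, the function below collects each `FATAL EXCEPTION` block written under the `AndroidRuntime` tag. It is not the script used to produce the study data. A line from any other tag ends the current block, so crash output interleaved with other processes' messages would be cut short.

```python
import re
from typing import Optional

# Assumes `adb logcat -v threadtime` lines such as:
# "05-12 10:15:32.123  2345  2345 E AndroidRuntime: FATAL EXCEPTION: main"
RUNTIME_LINE = re.compile(r"\sE AndroidRuntime: ?(.*)$")


def extract_fatal_exceptions(logcat: str) -> list[list[str]]:
    """Return one list of AndroidRuntime message lines per FATAL EXCEPTION block."""
    crashes: list[list[str]] = []
    current: Optional[list[str]] = None
    for line in logcat.splitlines():
        match = RUNTIME_LINE.search(line)
        if match is None:
            current = None  # a line from another tag ends the block
            continue
        message = match.group(1).strip()
        if message.startswith("FATAL EXCEPTION"):
            current = [message]
            crashes.append(current)
        elif current is not None:
            current.append(message)
    return crashes


log = """05-12 10:15:32.101  2345  2345 D MyApp   : onClick
05-12 10:15:32.123  2345  2345 E AndroidRuntime: FATAL EXCEPTION: main
05-12 10:15:32.123  2345  2345 E AndroidRuntime: Process: com.example.app, PID: 2345
05-12 10:15:32.123  2345  2345 E AndroidRuntime: java.lang.NullPointerException
05-12 10:15:32.123  2345  2345 E AndroidRuntime: \tat com.example.app.MainActivity.onClick(MainActivity.java:42)
05-12 10:15:32.130   567   600 I ActivityManager: Process com.example.app (pid 2345) has died"""

crashes = extract_fatal_exceptions(log)
print(crashes[0][2])  # java.lang.NullPointerException
```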

Report Reproducibility and Readability User Study Data

- Here is the link to the user study data (includes links to CrashScope reports)

5. Authors

- Kevin Moran, The College of William and Mary, VA, USA. E-mail: kpmoran at cs dot wm dot edu

- Mario Linares-Vásquez, The College of William and Mary, VA, USA. E-mail: mlinarev at cs dot wm dot edu

- Carlos Bernal-Cárdenas, The College of William and Mary, VA, USA. E-mail: cebernal at cs dot wm dot edu

- Christopher Vendome, The College of William and Mary, VA, USA. E-mail: cvendome at cs dot wm dot edu

- Denys Poshyvanyk, The College of William and Mary. E-mail: denys at cs dot wm dot edu